
The team at Wisdom VR discusses why 360 filmmaking provided the most in-depth look at what it was truly like on the front lines of the pandemic.

The small team at WisdomVR is currently nominated for an Emmy (Outstanding Interactive Program) for their emotional and awe-inspiring documentary Inside COVID19.

We recently caught up with Gary Yost, producer/director (and one of the inventors of 3D Studio Max), and David Lawrence, 360 post-production artist, to talk about the technology that goes into 360 storytelling, the challenges it presents, and why they rely on tools like Boris FX Mocha Pro.

Gary: From the beginning of time, humans have been storytellers. In fact, storytelling is a big part of what makes us human. And in telling stories, the goal has always been to immerse the listener or viewer as deeply in the story as possible. A few years ago we recognized that the stereoscopic 360/VR format, if harnessed in the best possible way (e.g., comfortable stereo imaging and 16-channel spatial audio), was the ultimate way to put a viewer inside the story.

It then took years of experimenting to work out our technical pipeline for producing world-class stereoscopic imagery and, even more importantly, to develop a storytelling style that uses locations to drive the story deep into the mind of the viewer… deeper than any other medium can. If you don’t own a VR headset, you’ll have no idea what I’m talking about, because this is an experiential phenomenon. It’s not something that can be described.

Gary: Our first meeting was in May 2020 and we released the film in November 2020, so six months in total. Those first two months were spent writing proposals and trying to get funded, and once Eric Cheng, our executive producer at Oculus, found a bit of cash for us to get going in mid-July, we were off and running. So in reality it only took four months of actual work.

The biggest challenge was creating seven minutes of 6720x6720 3D stereoscopic animation inside the molecular world. Our brilliant animator Andy Murdock was supercharged by a significant grant of rendering time from the Chaos Group, which let us use their Chaos Cloud V-Ray renderer. Without the support from Chaos, this almost superhuman task would never have been possible. Remember that an HD frame is 2 megapixels, but a 6720x6720 stereo frame is more than 45 megapixels!
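The pixel arithmetic behind that comparison is easy to check. The minimal Python sketch below compares a 1920x1080 HD frame with the 6720x6720 frame size Gary mentions; treating that frame as an over/under stereo image made of two 6720x3360 eyes is our assumption, not something stated in the interview.

```python
def megapixels(width: int, height: int) -> float:
    """Return the pixel count of a frame in millions of pixels."""
    return width * height / 1_000_000

# 1920x1080 HD reference frame.
hd = megapixels(1920, 1080)
# Full 6720x6720 frame; assumed here to be over/under stereo (two 6720x3360 eyes).
stereo_frame = megapixels(6720, 6720)
per_eye = megapixels(6720, 3360)

print(f"HD: {hd:.2f} MP")
print(f"Stereo frame: {stereo_frame:.2f} MP ({stereo_frame / hd:.1f}x HD)")
print(f"Per eye (assumed layout): {per_eye:.2f} MP")
```

Under these assumptions every rendered frame carries roughly 22 times the pixels of an HD frame, which is why a cloud rendering grant mattered so much.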

Inside COVID19 virion rigging and rendering example

Gary: “Extremely technical” is right, particularly when it comes to stereoscopic 360 video at 7k x 7k resolution, and even more so when you’re super discerning about stereoscopic imaging quality. Because of my background in 3D animation, and because CGI makes it possible to create physically perfect stereoscopic imagery, my standards for stereo 360 imaging are extremely high. Before 2018, the only commercially available stereo 360 rigs that provided enough overlap to algorithmically generate a high-quality stereo image were the Jaunt One and the Yi Halo, which were built to use the Google JUMP stitching assembler.

Those cameras were out of our price range (and soon afterward Google discontinued the JUMP assembler). Luckily, the ZCAM folks (who made the $34,000 V1 Pro, two of which are now orbiting Earth on the ISS) were experimenting with a lower-cost $9,000 10-camera rig with a small interocular distance and a tremendous amount of overlap. That overlap gives the optical flow algorithm in our stitching software (Mistika VR) a lot of flexibility to derive naturalistic equirectangular image pairs. The camera is so good at stereo imaging that it’s the key enabling technology behind our nonprofit’s world-class stereoscopic 360 video.
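An equirectangular image maps longitude to the horizontal axis and latitude to the vertical axis. The sketch below shows that mapping for a single view direction. The axis convention (x right, y up, z forward) and the 6720x3360 per-eye size are assumptions for illustration, and this is only the generic projection, not Mistika VR’s optical flow stitching.

```python
import math

def equirect_pixel(direction, width, height):
    """Map a nonzero 3D view direction to (u, v) pixel coordinates in an
    equirectangular image.

    Assumed convention: x right, y up, z forward; u grows to the right,
    v grows downward, and the image center looks straight ahead.
    """
    x, y, z = direction
    length = math.sqrt(x * x + y * y + z * z)
    lon = math.atan2(x, z)        # -pi..pi, 0 = straight ahead
    lat = math.asin(y / length)   # -pi/2 (nadir) .. +pi/2 (zenith)
    u = (lon / (2 * math.pi) + 0.5) * width
    v = (0.5 - lat / math.pi) * height
    return u, v

W, H = 6720, 3360  # assumed per-eye equirectangular size
print(equirect_pixel((0, 0, 1), W, H))  # straight ahead: image center
print(equirect_pixel((1, 0, 0), W, H))  # 90 degrees to the right
print(equirect_pixel((0, 1, 0), W, H))  # zenith: top row of the image
```

Note how the whole top row of the image collapses to a single point (the zenith), which is part of why the poles need special handling later in the pipeline.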

There’s still no camera available that can compete with it for naturalistic stereo, particularly when getting close to people and shooting in tight rooms. The great war photojournalist Robert Capa used to say, “If your pictures aren’t good enough, you aren’t close enough,” and that maxim has been a prime directive throughout my life as a photographer. My interpretation of Capa’s quote is that viewers have to be emotionally invested in the character before they’ll really pay attention. Unfortunately, 360/VR video relies on some crazy optical flow interpolation of the image field to create stereo pairs, and without tons of overlap between cameras it’s impossible to accurately stitch a subject close to the camera. That’s why most stereo 360 videos either show all sorts of stitching issues or keep their subjects two meters or more from the camera. This isn’t true with the ZCAM V1… we can put people inches away from this camera, and the effect in the headset is that the subject becomes huge, creating a hyper-emotional feeling for the viewer.
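The geometry behind “get close” can be sketched simply: the angular parallax between two neighboring lenses grows quickly as a subject approaches the rig, and that disparity is what the optical flow step has to resolve. The Python below uses a hypothetical 10 cm spacing between adjacent lenses; the ZCAM V1’s actual lens geometry isn’t given in this interview.

```python
import math

def parallax_deg(baseline_m: float, distance_m: float) -> float:
    """Angle (degrees) subtended by two lenses `baseline_m` apart, as seen
    from a point `distance_m` away on their perpendicular bisector."""
    return math.degrees(2 * math.atan(baseline_m / (2 * distance_m)))

BASELINE_M = 0.10  # hypothetical spacing between adjacent lenses

for d in (2.0, 1.0, 0.5, 0.25, 0.1):
    print(f"{d:>5.2f} m -> {parallax_deg(BASELINE_M, d):5.1f} deg")
```

With these assumptions the parallax is under 3 degrees at two meters but over 50 degrees at 10 cm, so a close subject looks very different to each lens, and only generous overlap gives the stitcher enough shared image to reconcile the views.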

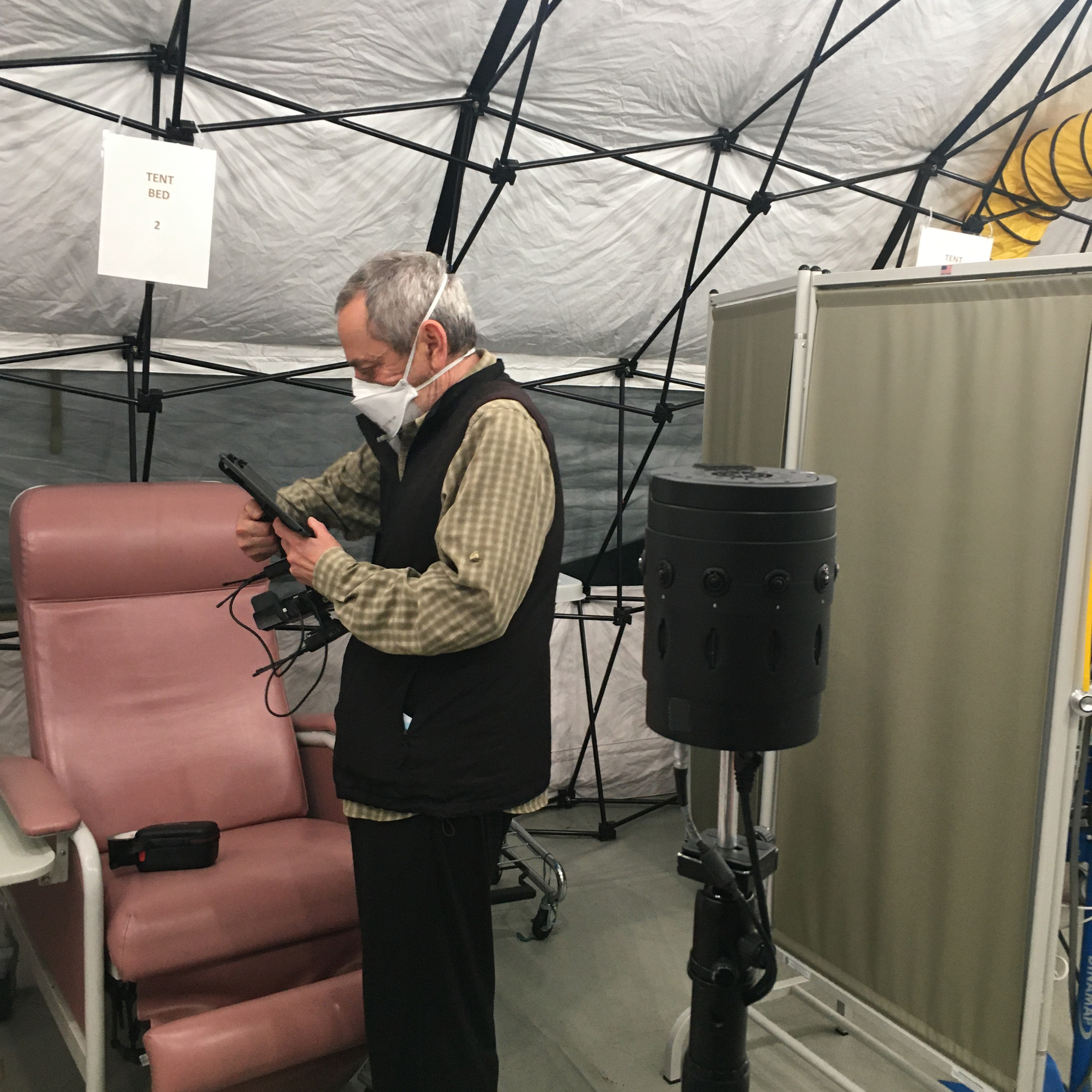

Inside COVID19: Gary Yost on set


Sadly, ZCAM made only twenty V1 cameras before shutting down their hardware experiment, but fortunately we own two of them. On the software side, in addition to SGO’s Mistika VR stitcher, Mocha Pro and After Effects make up our core suite of essential VFX tools. We use both Premiere and Final Cut Pro X at different phases of post-production, and we use ffmpeg heavily for file transcoding and encoding. We captured over 850 separate shots during Inside COVID19’s production phase and stitched all of them at a 4k x 4k proxy resolution. Once we had a locked edit, I’d restitch at 7k and then move to AE/Mocha Pro for the rig removal process.
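For the ffmpeg side of a workflow like this, a script can build command lines instead of typing one per shot. The sketch below only assembles the arguments for a downscaled ProRes Proxy transcode of a stitched clip, for example to make lightweight review copies. The file names, the 4096x4096 size, and the choice of ffmpeg’s `prores_ks` encoder are hypothetical, not the team’s documented settings (their proxies were stitched in Mistika VR).

```python
from pathlib import Path

def proxy_command(src: Path, dst: Path, size: int = 4096) -> list[str]:
    """Build an ffmpeg argument list that scales a square stitched clip to
    `size` x `size` and encodes it as ProRes Proxy (prores_ks profile 0)."""
    return [
        "ffmpeg",
        "-i", str(src),
        "-vf", f"scale={size}:{size}",
        "-c:v", "prores_ks",
        "-profile:v", "0",  # 0 selects the Proxy profile in prores_ks
        "-c:a", "copy",     # pass any audio through untouched
        str(dst),
    ]

# Hypothetical file names for illustration.
cmd = proxy_command(Path("shot_0001_stitch.mov"), Path("shot_0001_proxy.mov"))
print(" ".join(cmd))
# To run it (requires ffmpeg on PATH):
#   import subprocess; subprocess.run(cmd, check=True)
```

Returning a list rather than a shell string avoids quoting problems with file names that contain spaces.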

Regarding rovers, we didn’t move the camera at all on this project, for a few reasons. In general, we don’t believe in gratuitous camera moves in 360/VR, because camera moves in 3DoF video can cause ocular/vestibular dissonance in many viewers and make them nauseous; beyond that, our story simply didn’t call for camera moves. We did experiment with a stereo 360 hyperlapse sequence near the end of the film, and nobody’s complained about getting sick yet.

Sound is just as important as picture, and we use many tools for the 16-channel third-order ambisonic spatial mix, because original music (beautifully scored by James Jackson) and sound design are a massive part of our workflow. I do spatial mixes in the Reaper DAW along with Noise Makers’ Ambi-Pan and Ambi-Head spatial audio plugins. Charles Verron of Noise Makers actually wrote a custom plugin for us to smoothly interpolate certain SFX between head-locked and spatialized tracks. I had previously mixed only in 4-channel first-order spatial format, so Inside COVID19 was my first time putting in the extra effort to mix such a high-resolution spatial sound field. Listening to Inside COVID19 with headphones tremendously enhances the immersiveness of the piece.
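Two bits of arithmetic sit behind this paragraph. A full-sphere ambisonic mix of order N carries (N+1)^2 channels, which is why first order is 4 channels and third order is 16. And one standard way to move a sound smoothly between a head-locked track and a spatialized one is an equal-power crossfade. The Python below sketches both; the crossfade is a generic technique, not the custom plugin Charles Verron wrote.

```python
import math

def ambisonic_channels(order: int) -> int:
    """Number of channels in a full-sphere ambisonic signal of the given order."""
    return (order + 1) ** 2

def equal_power_gains(t: float) -> tuple[float, float]:
    """Gains (head_locked, spatialized) for crossfade position t in [0, 1].

    Equal-power law: head^2 + spatial^2 == 1, so perceived loudness stays
    roughly constant while the sound moves between the two beds.
    """
    t = min(max(t, 0.0), 1.0)  # clamp out-of-range positions
    return math.cos(t * math.pi / 2), math.sin(t * math.pi / 2)

print(ambisonic_channels(1), ambisonic_channels(3))  # 4 16
for t in (0.0, 0.5, 1.0):
    head, spatial = equal_power_gains(t)
    print(f"t={t:.1f} head-locked={head:.3f} spatialized={spatial:.3f}")
```

A plain linear crossfade would dip in loudness around the midpoint, which is the usual reason to prefer the equal-power curve for moves like this.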

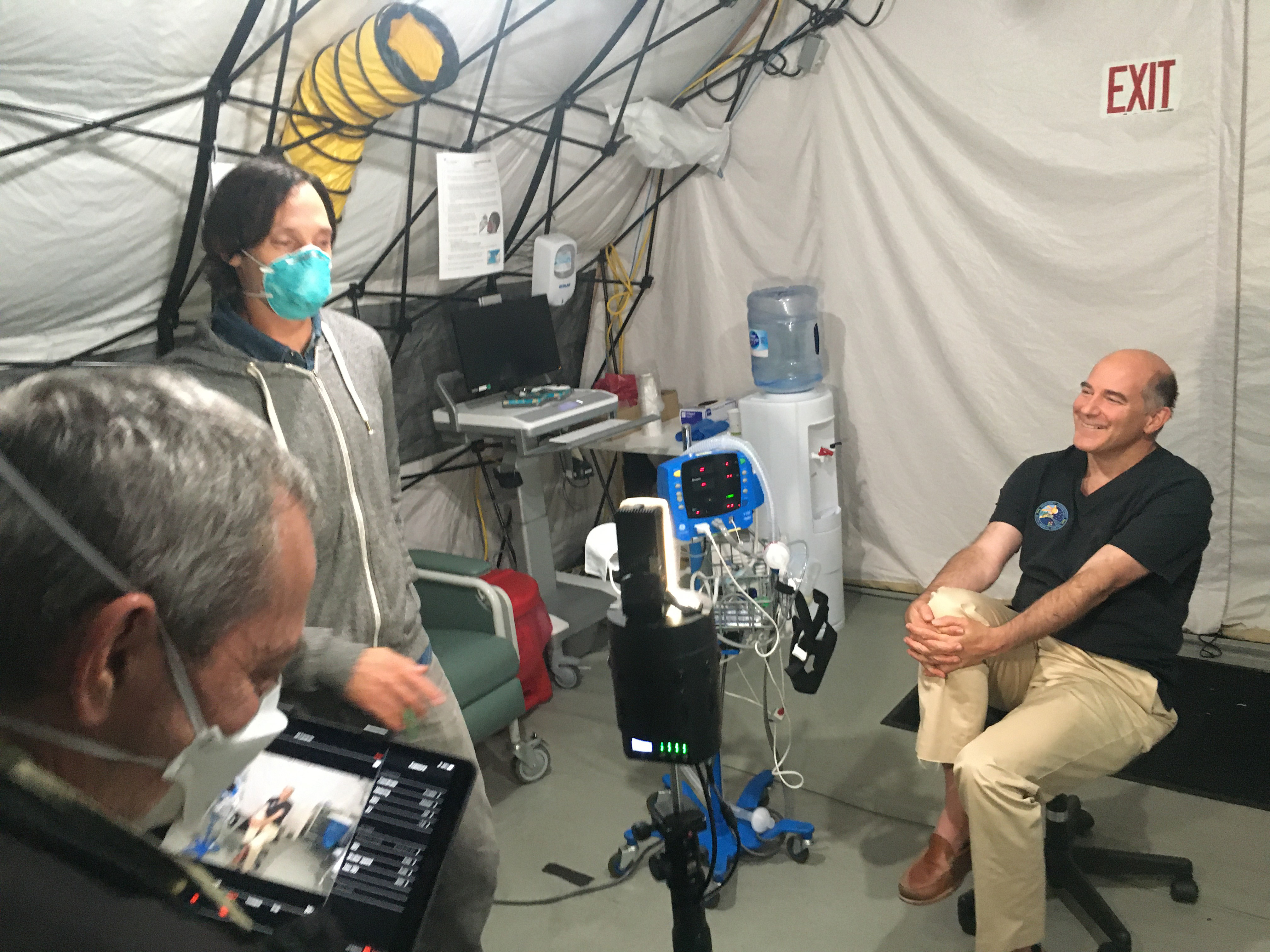

Inside COVID19: L-R, Gary Yost, Adam Loften, Dr. Child


Gary: All of our work is produced in stereoscopic 360/VR, because the feeling you get from a stereo image is infinitely more immersive than a monoscopic, wallpaper-like image. Many of the stereo 360/VR videos you’ll watch are made by producers who don’t have access to a V1 or V1 Pro, and the stereo just feels “off” and creates uncomfortable eye strain.

The V1’s radial array of 10 cameras produces a huge dataset, but of course the corollary is that 10 cameras across 850 shots means 8,500 video files. That’s daunting but doable, and it’s one reason many stereoscopic 360/VR films are nowhere near as long as the 35-minute runtime of Inside COVID19. And with final stitches at 49 megapixels per frame in ProRes format… you can imagine how fast all that adds up to many terabytes of data. In fact, our final production archive drive clones are 16 TB each. Insane.
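The data volume follows from simple multiplication. The sketch below counts source files (assuming one file per lens per shot) and estimates the uncompressed size of one final frame. The 7000x7000 frame size (the nominal “7k x 7k”, which gives 49 MP) and 10-bit 4:2:2 sampling (20 bits per pixel) are assumptions, and ProRes compresses well below this uncompressed figure.

```python
CAMERAS = 10  # lenses in the ZCAM V1's radial array
SHOTS = 850   # shots captured for Inside COVID19

def source_files(cameras: int, shots: int) -> int:
    """Assumes one video file per lens per shot."""
    return cameras * shots

def uncompressed_frame_bytes(width: int, height: int, bits_per_pixel: int) -> int:
    """Raw (uncompressed) size of a single frame in bytes."""
    return width * height * bits_per_pixel // 8

files = source_files(CAMERAS, SHOTS)
# Assumed: 7000x7000 final stitch, 10-bit 4:2:2 (20 bits per pixel on average).
frame = uncompressed_frame_bytes(7000, 7000, 20)
print(f"{files} source files")                         # 8500 source files
print(f"{frame / 1e6:.1f} MB per uncompressed frame")  # 122.5 MB per uncompressed frame
```

Multiplying that per-frame figure by a frame rate and a 35-minute runtime shows why even compressed masters quickly reach terabytes.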

Add to that the extra post time for doing all the rig removal and compositing on 49-megapixel frames… I bought a tricked-out 2019 Mac Pro for this and used it along with my stitching PC and an iMac Pro, and Adam Loften (co-director) and David each had multiple machines at their studios. Thank heavens for file-sharing tools like Dropbox.

David: The most challenging shots for rig removal were taken with the camera mounted to the passenger seat of a car. The ZCAM V1 is the only commercially designed VR camera you could even attempt something like this with, and it’s still hard to pull off. The first step is getting a good stitch and optimizing it for the remove, which means forcing the nadir and zenith to be as close to mono as possible. Gary did the stitching in Mistika VR and did great work setting up these shots.
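One way to get mono poles in a stereo 360 image is to fade the stereo disparity to zero as latitude approaches the zenith and nadir, so both eyes see the same pixels there. This is a generic sketch of that idea, not Mistika VR’s implementation; the 60 degree start latitude and the cosine ramp are hypothetical choices.

```python
import math

def stereo_weight(lat_deg: float, start_deg: float = 60.0) -> float:
    """Fraction of full stereo disparity to keep at a given latitude.

    1.0 between -start_deg and +start_deg, then a smooth cosine ramp down to
    0.0 at the zenith (+90) and nadir (-90), where the image becomes mono.
    """
    a = abs(lat_deg)
    if a <= start_deg:
        return 1.0
    t = (a - start_deg) / (90.0 - start_deg)  # 0 at start_deg, 1 at the pole
    return 0.5 * (1 + math.cos(math.pi * t))

def pole_merged_disparity(disparity_px: float, lat_deg: float) -> float:
    """Scale a horizontal disparity (in pixels) by the latitude-dependent weight."""
    return disparity_px * stereo_weight(lat_deg)

for lat in (0, 60, 75, 90):
    print(lat, round(stereo_weight(lat), 3))
print(pole_merged_disparity(12.0, 75))
```

The cosine ramp starts and ends with zero slope, so there is no visible crease where the fade begins.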

Once this is done, I’m ready to go into Mocha. The key to these shots is making separate clean plates for the left and right eyes. Mocha can automatically generate a right-eye view from the left eye for stereo removes, but that doesn’t work at the nadir or zenith, because “left” and “right” don’t really make sense at the poles. There’s no way to know the viewer’s orientation: depending on the direction they’re facing when they look up or down, the eyes may be reversed. So I’ll start by making a left-eye clean plate in Photoshop, then copy my left-eye patch onto a right-eye plate and carefully blend it in. I look for places around the edges that need to be in stereo and feather in the right-eye plate to help sell the shot. I’ll check in the HTC Vive as I go and tweak until it’s right.
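A feathered blend is a common way to ease a patch into a plate without a visible seam. The Python sketch below builds a mask that is 0 at the patch border and ramps linearly to 1 a few pixels in, then composites the patch over the plate with it. Grayscale list-of-lists images and a linear ramp are simplifying assumptions; this illustrates the idea, not David’s Photoshop workflow.

```python
def feather_mask(h: int, w: int, feather: int) -> list[list[float]]:
    """2D mask: 0.0 on the patch border, ramping linearly to 1.0 `feather` px in."""
    mask = []
    for y in range(h):
        row = []
        for x in range(w):
            d = min(x, y, w - 1 - x, h - 1 - y)  # distance to the nearest edge
            row.append(min(1.0, d / feather) if feather > 0 else 1.0)
        mask.append(row)
    return mask

def composite(plate, patch, mask):
    """Per-pixel linear blend: patch where mask is 1, original plate where it is 0."""
    return [
        [p * m + b * (1.0 - m) for b, p, m in zip(b_row, p_row, m_row)]
        for b_row, p_row, m_row in zip(plate, patch, mask)
    ]

plate = [[0.0] * 5 for _ in range(5)]  # stand-in for the original right-eye plate
patch = [[1.0] * 5 for _ in range(5)]  # stand-in for the copied left-eye patch
out = composite(plate, patch, feather_mask(5, 5, 2))
for row in out:
    print(row)
```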

When I’ve done my job well, my work is invisible. By far the hardest shot was with the car moving in bright overhead sunlight, which caused vibration and constantly moving shadows throughout the shot. Once I found a texture co-planar to the seat that would track solidly, the remove was pretty straightforward. With good clean plates, it totally worked! I really can’t imagine how I’d do this without Mocha. Read more about how Wisdom VR used Mocha Pro on Inside COVID19.
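A planar tracker follows a flat surface, and the motion of a plane between frames can be described by a 3x3 homography that moves a patch’s four corners (a “corner pin”). The sketch below only applies a given homography to corner points; estimating that homography from the tracked texture is where Mocha does the real work, and the matrix values here are made up for illustration.

```python
def apply_homography(H, x, y):
    """Map point (x, y) through a 3x3 homography H given as row-major nested lists."""
    xh = H[0][0] * x + H[0][1] * y + H[0][2]
    yh = H[1][0] * x + H[1][1] * y + H[1][2]
    w = H[2][0] * x + H[2][1] * y + H[2][2]  # projective divide term
    return xh / w, yh / w

def corner_pin(H, corners):
    """Transform each (x, y) corner of a patch by the same homography."""
    return [apply_homography(H, x, y) for x, y in corners]

H = [[2, 0, 10], [0, 2, -5], [0, 0, 1]]  # made-up scale-and-shift
print(corner_pin(H, [(0, 0), (100, 0), (100, 50), (0, 50)]))
# [(10.0, -5.0), (210.0, -5.0), (210.0, 95.0), (10.0, 95.0)]
```

A nonzero bottom row (for example `[0.001, 0, 1]`) adds perspective foreshortening, which is what lets a corner pin follow a seat surface as it tilts relative to the lens.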

Gary: That I get to do it! And that I get to push myself to do new things on every project. I’ve learned so much about directing from my co-founder Adam Loften, and it’s that process of learning that makes me most excited about this work.

David: I love how every job is different and presents completely new challenges that force me to learn, push my abilities and techniques, and keep growing. And I love being part of a team where we’re always inspiring each other to do our best work. It’s so satisfying when all the pieces come together so that nothing gets between the story and the viewer. That’s the magic of this medium.
