Quarterbacking Sports Promos with Sapphire and Mocha Pro

Artist C.M. De La Vega shares his motion graphics/VFX playbook and how he helps coach artists looking to score creative touchdowns in After Effects.

Artists are still very much needed and will be as more virtual productions like The Mandalorian ramp up.

Once again Industrial Light and Magic rocks the world of visual effects with the production of The Mandalorian using the new virtual production technology. It has sent shock waves through the VFX community and worries many of my fellow compositors about the future of our jobs. After all, shots that would have normally been done as a green screen are now captured in camera without compositing. Over 50 percent of The Mandalorian Season 1 was filmed using this new methodology and this scares the pudding out of my fellow pixel practitioners. As of this writing there are 10 virtual sets in production with roughly another 100 under way all around the world. Is this the end of our careers? Are we soon to be as obsolete as CRTs? Will industry standard visual effects tools like Nuke, Resolve, Silhouette or Mocha Pro go the way of the Dodo? The short answer is "no!" The long answer is "hell no!"

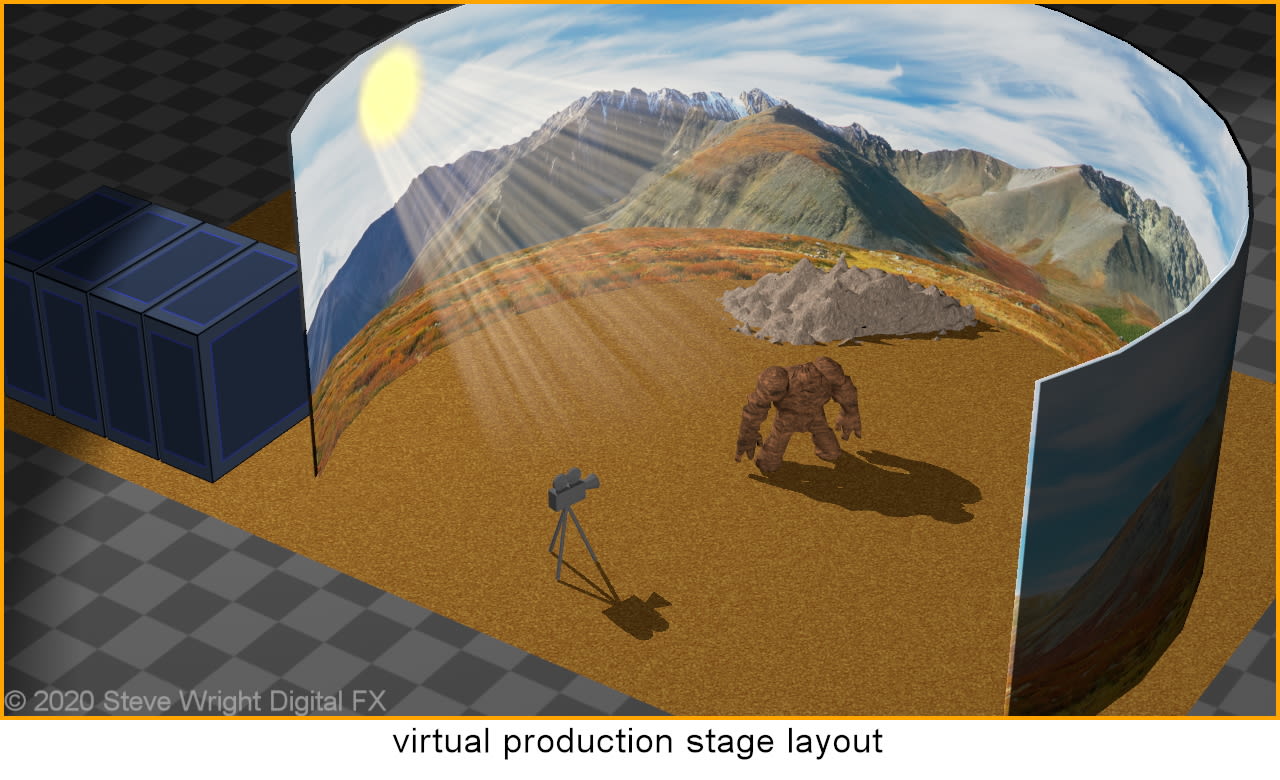

We can think of the virtual production stage as a massive update of the old rear projection technique — with computers. A series of major technologies have converged — camera tracking, LED light panels, interactive database management, optimized rendering algorithms, massively parallel real-time rendering — to focus on the single goal of capturing the finished visual effects shot in camera.

Built by ILM StageCraft, The Mandalorian virtual production stage utilizes an immersive environment where the talent is surrounded by a huge LED wall as illustrated above. It is 20 feet tall with a 75-foot-diameter performance space, and it wraps 270 degrees around the stage. Other virtual stages may be smaller, not wrap a full 270 degrees, or may even simply be two or three flat walls rather than an arc. The LED walls are driven by multiple synchronized Unreal Engines running at 4K or 8K resolution, each responsible for a portion of the wall.

The camera is tracked in real time so its location and orientation are always known. The camera position is continuously transmitted to the Unreal Engines, which render the VR environment in real time. As the camera moves on-set, the virtual environment on the LED walls moves in perfect sync, complete with proper perspective and parallax shifts relative to the on-set talent.
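To picture why the wall image has to be re-rendered for every camera position, here is a minimal top-down sketch. It is not how StageCraft or Unreal Engine is implemented; it assumes a flat wall along the line z = 0, a camera in front of it (negative z), and virtual scenery "behind" it (positive z). The function name `wall_position` and all coordinates are hypothetical.

```python
def wall_position(camera_x: float, camera_z: float,
                  point_x: float, point_z: float) -> float:
    """Return the x coordinate on a flat wall (z = 0) where a virtual point
    must be drawn so that it lines up correctly for the tracked camera.

    Assumes camera_z < 0 (in front of the wall) and point_z > 0 (virtual
    scenery behind the wall). Units are arbitrary, e.g. feet.
    """
    # Fraction of the way along the camera-to-point ray at which it hits the wall.
    t = (0.0 - camera_z) / (point_z - camera_z)
    return camera_x + t * (point_x - camera_x)


if __name__ == "__main__":
    # Two virtual objects straight ahead: one 10 ft and one 30 ft behind the wall.
    for cam_x in (0.0, 2.0):
        near = wall_position(cam_x, -10.0, 0.0, 10.0)
        far = wall_position(cam_x, -10.0, 0.0, 30.0)
        print(f"camera x={cam_x}: near object drawn at {near}, far object at {far}")
```

When the camera slides sideways, the two objects must be drawn at different places on the wall even though they sat at the same spot before the move. That difference is the parallax shift the render has to reproduce every frame.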

It takes a staggering amount of engineering to make this all work together so there is also an on-set "brain bar" of artists, engineers, and technicians manning workstations connected to the overwhelming computing power and high speed disk arrays. Some are responsible for loading and managing the VR environment, others monitor the Unreal Engine performance, while others are lighting specialists for the LED light panels containing high performance RGB LEDs. It is as if the on-set talent were surrounded by a gigantic wraparound LED monitor that is operated by a tech team and orchestrated by the director.

The three main promises of virtual production are to see the final scene at the time of capture, in-camera compositing, and interactive lighting. Directors want to see the final shot immediately rather than have to wait to see their green screen shots until after a compositor has keyed it in post. Producers love the in-camera compositing so they don’t have to pay compositors for those green screen shots. Producers also love relighting the stage by having the brain bar twiddle a few knobs instead of having to pay gaffers and lighters to spend hours moving lights around, and directors love the ability to place lights wherever their creative whims dictate unfettered by reality.

The LED wall actually lights both the set and the talent with light from the VR environment as if they were on location and even includes reflections of the environment on shiny surfaces. It's as if the stage and talent are actually inside the VR world — almost. More about the "almost" in a minute.

Another advantage is better performances. The on-set talent will actually see the T-Rex tearing after them in real-time and react accordingly rather than simply imagining the beast is upon them like with a green screen shot. Less acting, more reacting. It also offers other performance advantages such as the ability to get the eye line right instead of just looking at a pair of ping pong balls on a green stick.

Another big win is the ability to make spontaneous on-set creative decisions. The director gets an idea for a new angle, but there is a tree in the way. No problem. The CG database wrangler simply moves the tree out of the way. Similarly, the lights can be moved around, increased or decreased in brightness, or the sky can be made brighter, darker, or redder — all in a matter of seconds without stopping production to relight the set. The talent can just stand there while the lighting is dialed in so everything can be seen in context. Directors just love this kind of God-like creative freedom. Gosh, who wouldn't?

Another big win is how quickly the next shot can be set up. Rather than breaking down a practical set or physically moving the crew to another location, the database wrangler simply punches up the next scene from the database while the set dressers replace the stage floor and any on-set props. The production could move from desert to forest in an hour without leaving the virtual stage. This provides unheard-of production efficiency and shortened schedules that lower production costs.

This all sounds utterly fantastic. It's a whole new day in visual effects production where jaw-dropping VFX shots are captured in-camera in real-time far faster than classic green screen production or by building huge and expensive sets. At least that is how it seems at first glance. But a closer look reveals that reality is messier than that and there is still plenty of need for skilled compositors with this new tech.

Like any technology, virtual production has its own set of issues, limitations, and problems. So here we will take a closer look at those issues and even see where we might get more compositing work to fix them. Remember, compositors do much more than composite green screens. We have the tools and techniques required to fix virtually anything that goes wrong with principal photography, including virtual production shots.

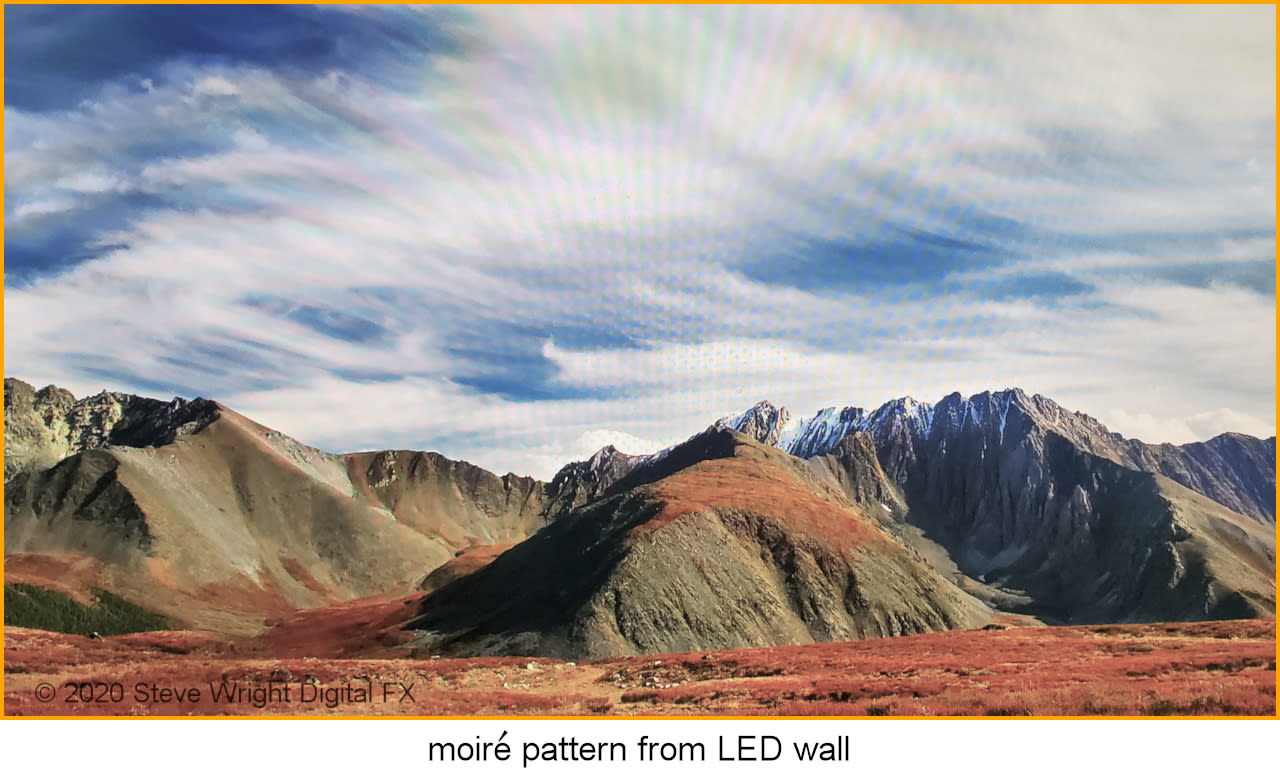

Moiré - One challenging problem, illustrated above, is the moiré pattern that can erupt in the VR footage. This occurs because the LEDs in the wall can be roughly the same size as the pixels in the camera's imaging sensor when seen through the camera lens. You would not see this with your eyes standing on the set any more than you do when looking at an LED monitor. However, to see what a digital camera sees when photographing an LED wall, try looking at a picture on your monitor through your cell phone camera from around 6 to 12 inches away and see what happens.

The simplest solution is to always have the video wall out of focus due to depth of field. However, that solution imposes constraints on scene composition and camera lenses that directors don't like. Various virtual stage builders have other solutions, some better than others, but it is still an issue to be addressed. I do hope you never get a moiré shot to fix in post.
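The two ideas above (LEDs imaged at about the size of a sensor pixel, and defocus as the cure) can be sketched with thin-lens arithmetic. This is only a rough illustration: the function names, the example lens, the 2.84 mm LED pitch, the 6 micron photosite pitch, and the "blur circle larger than the LED image" test are all assumptions for the sake of the example, not figures from any stage builder.

```python
def projected_led_pitch_mm(led_pitch_mm: float, focal_length_mm: float,
                           wall_distance_mm: float) -> float:
    """Size of one LED-to-LED spacing as imaged on the sensor (thin-lens model)."""
    magnification = focal_length_mm / (wall_distance_mm - focal_length_mm)
    return led_pitch_mm * magnification


def blur_circle_mm(focal_length_mm: float, f_number: float,
                   focus_distance_mm: float, wall_distance_mm: float) -> float:
    """Diameter of the defocus blur circle on the sensor for a point on the wall
    when the lens is focused at focus_distance_mm (thin-lens model)."""
    aperture_mm = focal_length_mm / f_number
    return (aperture_mm * focal_length_mm
            * abs(wall_distance_mm - focus_distance_mm)
            / (wall_distance_mm * (focus_distance_mm - focal_length_mm)))


def wall_is_defocused(led_image_mm: float, blur_mm: float) -> bool:
    """Assumed rule of thumb: the LED grid is smeared out once the blur circle
    is larger than the imaged LED spacing."""
    return blur_mm > led_image_mm


if __name__ == "__main__":
    # Hypothetical setup: 35 mm lens at f/2.8, talent at 3 m, wall at 6 m.
    led_image = projected_led_pitch_mm(2.84, 35.0, 6000.0)
    blur = blur_circle_mm(35.0, 2.8, 3000.0, 6000.0)
    photosite_mm = 0.006
    print(f"LED spacing on sensor: {led_image / photosite_mm:.2f} photosites")
    print(f"Blur circle on sensor: {blur / photosite_mm:.2f} photosites")
    print("Wall defocused enough:", wall_is_defocused(led_image, blur))
```

Changing the lens, the f-stop, or the talent-to-wall distance changes both numbers, which is exactly why this fix constrains lens choice and scene composition.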

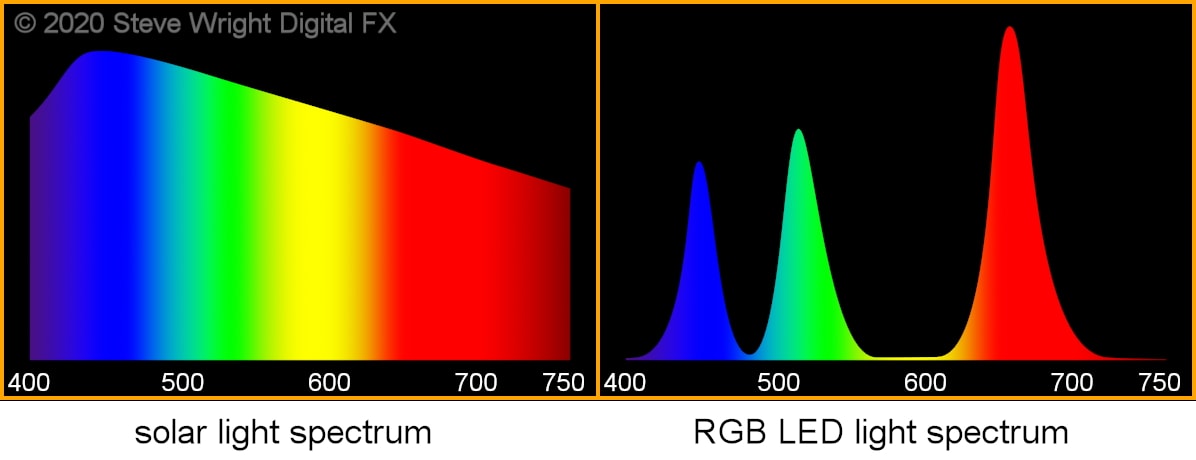

Metamers - The color of costumes and props can look fine under daylight, incandescent and even HMI lights, but when lit by the RGB LEDs of the LED wall their colors can suddenly shift due to metamerism. Why this happens is explained by the difference between the solar light spectrum and the RGB LED light spectrum illustrated above. The sun, incandescent, and HMI lights output a continuous spectrum of light, meaning light at all wavelengths. The LEDs, however, put out "spikes" of colored light only in specific narrow wavelength bands. When these two different types of light spectra reflect off of the same surface they can produce somewhat different colors. This can introduce vexing color correction problems that have to be fixed in post.
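A toy numerical model makes the effect concrete. Everything in it is invented for illustration: the spectrum is chopped into six bands, the camera channels are idealized boxes, and the two fabrics are hypothetical. Real spectra and sensor curves are far smoother, but the mechanism is the same.

```python
# Six wavelength bands from blue (index 0) to red (index 5). Toy values only.
SENSITIVITY = {
    "B": [1, 1, 0, 0, 0, 0],
    "G": [0, 0, 1, 1, 0, 0],
    "R": [0, 0, 0, 0, 1, 1],
}

CONTINUOUS = [1.0, 1.0, 1.0, 1.0, 1.0, 1.0]   # sun-like: energy in every band
LED = [0.0, 2.0, 2.0, 0.0, 0.0, 2.0]          # RGB LED-like: three narrow spikes

GRAY = [0.5, 0.5, 0.5, 0.5, 0.5, 0.5]         # hypothetical fabric A
TINTED = [0.2, 0.8, 0.7, 0.3, 0.4, 0.6]       # hypothetical fabric B


def camera_response(illuminant, reflectance):
    """Sum illuminant * reflectance * sensitivity over all bands, per channel."""
    return {
        channel: sum(i * r * s for i, r, s in zip(illuminant, reflectance, sens))
        for channel, sens in SENSITIVITY.items()
    }


if __name__ == "__main__":
    for name, light in (("continuous", CONTINUOUS), ("LED", LED)):
        print(name, "fabric A:", camera_response(light, GRAY))
        print(name, "fabric B:", camera_response(light, TINTED))
```

Under the continuous light the two fabrics give the camera identical values, so they are a metameric pair. Under the spiky light only three bands are sampled, and the same two fabrics no longer match.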

Photo courtesy Unreal Engine

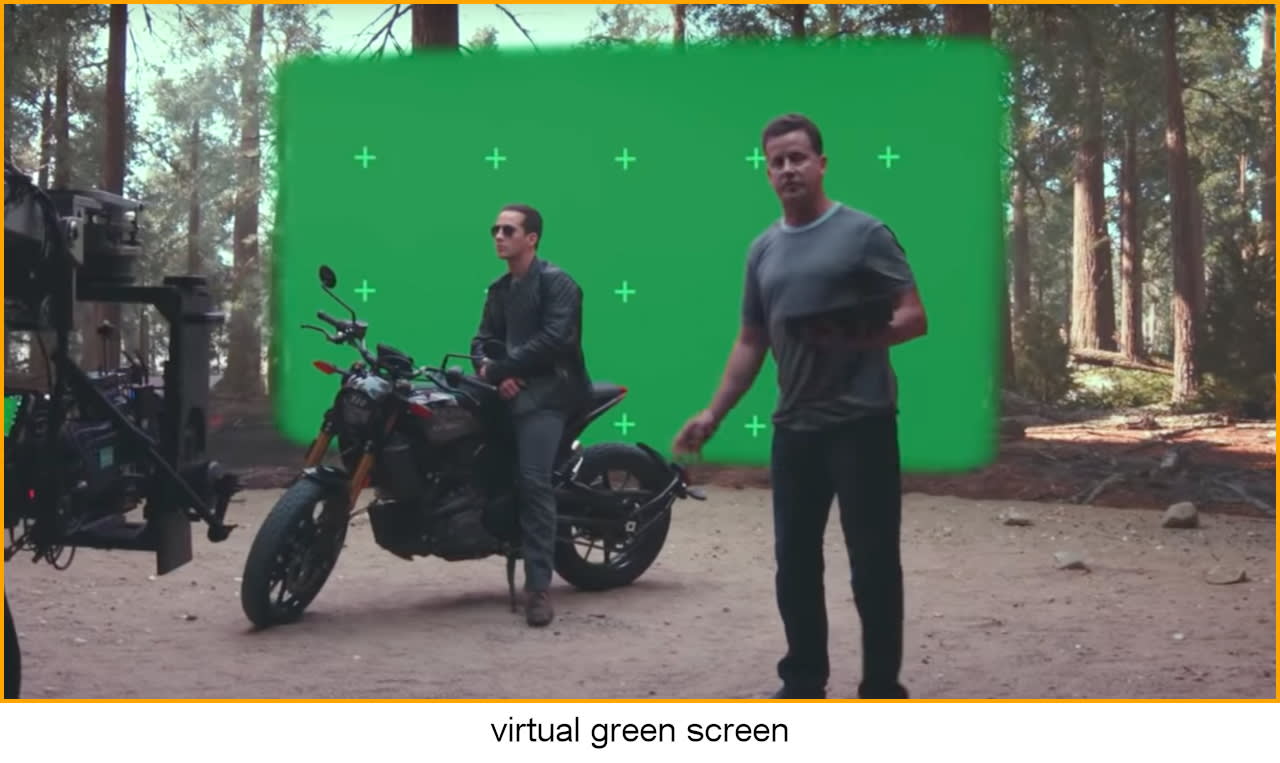

Virtual Green Screens - When all else fails on the virtual set they can program the LED wall to display a green screen like the one shown here. It tracks wherever the camera is looking so it is always behind the talent regardless of the camera move. Complete with tracking markers, a talented compositor can still turn it into a fine shot in post.

Practical Floors - The virtual set invariably has a practical floor such as a sandy desert or leaf-strewn forest that runs up to the edge of the LED wall. Very often the seam between the wall and floor has to be removed in post. More seriously, the practical floor must be a perfect color match to the VR environment all the way around the base of the wall. You can actually see the mismatch in the virtual green screen illustration. This too will need to be fixed in post.

Additional Lighting - The LED wall wraps around the back and sides of the stage so it can only provide lighting from those directions. Most shots must have additional lighting from above and/or from the front. Some virtual sets will have another LED wall suspended above the stage to add skylight or overhead visuals to reflect off of shiny surfaces. Care must be taken, of course, to keep these additional lights off of the LED wall lest they fog up the black levels.

Latency - The moving camera position is fed to the clutch of Unreal Engines to render the environment at very high resolution in real-time. While the Unreal Engines can keep up with the camera's frame rate of 24 fps, there is a propagation delay between a camera move and the updated environment render. For The Mandalorian the latency was 8 frames, which is 1/3 of a second. This has to be carefully managed to avoid embarrassing moments like starting a whip pan where the camera pans immediately but the background pauses for 1/3 of a second. Of course, we can fix this in post too.
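The arithmetic behind that figure, and what it means during a pan, can be sketched in a few lines. The 8 frames and 24 fps come from the text above; the 90 degrees per second pan speed and the function names are assumptions chosen only for illustration.

```python
def latency_seconds(latency_frames: int, fps: float) -> float:
    """Convert a render latency expressed in frames to seconds."""
    return latency_frames / fps


def background_lag_degrees(pan_speed_deg_per_s: float,
                           latency_frames: int, fps: float) -> float:
    """How far the camera has already panned before the wall catches up."""
    return pan_speed_deg_per_s * latency_seconds(latency_frames, fps)


if __name__ == "__main__":
    delay = latency_seconds(8, 24.0)
    lag = background_lag_degrees(90.0, 8, 24.0)  # hypothetical whip pan speed
    print(f"Latency: {delay:.3f} s")
    print(f"Background trails the camera by {lag:.1f} degrees")
```

The faster the camera move, the larger the angle by which the background trails it, which is why fast moves are the ones that need managing.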

Proxy Environments - There are some situations where the VR environment is rendered at a lower resolution or with simplified geometry. The talent is filmed over this "proxy environment" then it is replaced later with a high resolution render with the same camera move. Since the talent was captured over the low res environment that means he will have to be isolated in order to comp him over the high res render in post.

Matching Renders - In those situations where CG elements are rendered externally using RenderMan or V-Ray and then added to a VR scene in post, they obviously have to match the VR environment in the LED wall. But RenderMan and V-Ray renders do not look exactly like an Unreal Engine render. Careful technical work must be done to get the two renders to match up perfectly or somebody will have to fix it in post.

Depth of Field - As we learned in the moiré section above, the camera cannot be in sharp focus on the LED wall. While this is workable much of the time, there are situations where the wall needs to be just as sharply focused as the talent. In this situation the talent must be keyed and composited over a replacement render that is in sharp focus.

Bounce Light - Bounce light between on-set props and the LED wall must also be correct. A prop close to the wall would naturally bounce light onto nearby VR elements so the CG will have to be lit appropriately. Conversely, a VR element "close" to an on-set prop will naturally bounce light to it from the LED wall, but watch out for those metamers or we will have to fix it in post.

Classic FX - Even though the LED wall achieves a basic composite, there is still a long list of classic effects that will always need to be added by roto, paint, and prep artists. Glamour passes (digital beauty), skin cleanup, wire removal, and costume repair to name but a few. And they still need tons of tracking, roto, and paint for clean plates. Remember the proxy environments above? The talent will have to be roto'd and keyed to comp him over the replacement high res render.

Shadows - Shadows between the on-set props, talent, and the LED wall must also be consistent. If an on-set prop needs to cast a shadow that should appear in the LED wall then the CG will have to be amended to include it. Referring to the virtual production stage illustration at the top of this article, note where the sun is. The real sun is about 93 million miles away so its light rays are effectively parallel and so are its shadows. However, the VR sun is only a couple dozen feet away so its light rays diverge noticeably and as a result the shadows of the camera and on-set talent are not parallel.

It is hard to see how you would handle talent walking around and casting a moving shadow onto the LED wall without a talented compositor in post. The simplest solution is to restrict the shot composition so those shadows are never created in the first place. But we all know how much directors like to be told to restrict their shots.
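The parallel versus diverging shadow point is easy to put numbers on. The sketch below assumes a simplified top-down view with two objects placed symmetrically either side of the line to the light; the 10 foot separation, the 24 foot "VR sun" distance, and the function name are hypothetical.

```python
import math


def shadow_divergence_deg(separation: float, light_distance: float) -> float:
    """Angle between the ground shadows of two objects `separation` apart,
    lit by a point light `light_distance` away (same units for both)."""
    return math.degrees(2.0 * math.atan((separation / 2.0) / light_distance))


if __name__ == "__main__":
    feet_per_mile = 5280.0
    real_sun_ft = 93e6 * feet_per_mile   # roughly 93 million miles
    vr_sun_ft = 24.0                     # "a couple dozen feet", assumed

    print(f"Real sun: {shadow_divergence_deg(10.0, real_sun_ft):.10f} degrees")
    print(f"VR sun:   {shadow_divergence_deg(10.0, vr_sun_ft):.1f} degrees")
```

For the real sun the angle is vanishingly small, so the shadows read as parallel. For a light source on the wall the same two objects throw shadows that visibly splay apart.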

While virtual production offers exciting new vistas of creative freedom and in-camera effects, we have seen that upon close inspection it is a lot more complicated than it sounds. There are a great many details, restrictions, limitations, and issues that limit its applicability so many shots cannot be done this way. Further, many of these issues leave the in-camera shot incomplete or even incorrect which will then have to be fixed in post. We have even seen that in some cases they have to pop up a virtual green screen so we can key and comp as before. It is now clear that virtual production has a long list of issues that will still leave plenty of work for compositors.

Virtual production is a very important new technology for visual effects that is here to stay. But our industry's very foundation is rapid technological evolution and as visual effects artists, we have regularly met this challenge by staying abreast of these changes through education and training. Historically we have always risen to the challenge by developing new and indispensable roles to respond to these changes and this time will be no different.
